Seoul 2018

Mobile & Beyond

Agenda

Click on the sessions and demos below to view the content.

19 October 2018

-

08:52 | Welcome

Soheil Modirzadeh, MIPI DevCon Chairman, kicked off the day with a brief welcome and introduction of the keynote speaker.

-

08:57 | Keynote: Mobile Technologies for a Smart World

View the presentation slides »

Listen to the audio recording »

The rapid revolution taking place in the mobile world is changing our day-to-day lives, and the widespread use of smart devices makes this an exciting time. For example, 5G technology is driving a new wave of mobile applications: the millimeter-wave spectrum targeted by future cellular networks promises an affordable broadband wireless experience. In addition, ADAS technologies in automobiles, along with IoT devices, are also improving people's lives.

This presentation focuses on these emerging technologies and on how MIPI's interfaces are evolving to support them.

Presenter:

Jongshin Shin received his bachelor of science, master's and doctorate degrees in electronic and electrical engineering from Seoul National University, Seoul, Korea, in 1997, 1999 and 2004 respectively. He joined Samsung Electronics, Hwasung, Korea, in 2004, as a member of the technical staff and currently is a vice president working on serial link design for mobile and home applications.

-

09:17 | MIPI State of the Alliance

View the presentation slides »

Listen to the audio recording »

Peter Lefkin, MIPI's managing director, offers an update on MIPI's key initiatives and the latest working group activities.

Presenter:

Since 2011, Peter Lefkin has served as the managing director and secretary of MIPI Alliance, having returned to the role after leading the establishment of its operational support structure at its inception in 2004. In his role, Peter is the senior staff executive responsible for all MIPI Alliance activities and operations from strategy development to implementation.

-

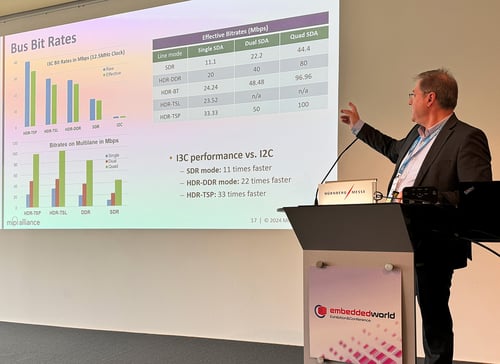

09:52 | Evolving MIPI I3C for New Usages and Industries

View the presentation slides »

Listen to the audio recording »

This presentation focuses on MIPI's efforts to evolve I3C's capabilities and accessibility, via I3C℠ v1.0, I3C℠ v1.1 and I3C℠ Basic, to allow broad, long-term adoption across multiple industries and usages – some of which weren't necessarily foreseen when MIPI's Sensor Working Group was first formed.

Presenter:

Ken Foust is a sensor technologist at Intel Corporation, where he leads the development of sensor solutions across Intel's entire product portfolio and R&D labs. In this capacity, he also works closely with the sensor industry and its consortiums, driving initiatives in inertial sensing, environmental sensing, bio-sensing and sensor standardization. Ken was awarded the Best of Sensors Expo 2013 Engineer of the Year Award for his recent standardization efforts within the sensor industry.

Before joining Intel, Ken held many positions, including director of product engineering at Kionix Inc. in Ithaca, N.Y. He started his career as a product engineer at IBM Microelectronics in Essex Junction, Vt. He received a bachelor of science in electrical engineering from Alfred University.

-

10:37 | MIPI Alliance Meets the Needs of Autonomous Driving

View the presentation slides »

Listen to the audio recording »

It is widely expected that future automobiles, especially those featuring autonomous driving systems, will see significant increases in the number of surround sensors, including radar, lidar and cameras. At the same time, improvements in resolution and dynamic range are expected to turn these sensors into very high-rate data sources. Similarly, future vehicles are projected to include multiple high-definition displays, which can be thought of as very high-rate data sinks. Transporting these very high-rate data streams from sensors and to displays is a technical challenge, and MIPI Alliance brings deep expertise to it from developing similar interface standards (C-PHY, D-PHY, CSI-2, etc.) for the mobile handset market.

MIPI Alliance has spent the past year gathering and agreeing on requirements, including studying the effects of functional safety (ISO 26262) and other issues unique to automotive. MIPI has also begun creating specifications for a physical layer that can meet these requirements. This presentation gives attendees a status update on MIPI's efforts to support the automotive market.

Presenter:

Matt Ronning is the director of engineering for Sony’s Component Solutions Business Division located in San Diego, Calif. He manages a small engineering team that provides both application and system engineering support for a diverse set of products, including GPS, Bluetooth, MMIC devices and power stages. Previous Sony assignments have included managing Sony’s automotive camera business for North America and new business development, with a special focus on the automotive market.

Currently Matt is the chair of the MIPI Alliance’s Automotive Working Group, which is chartered to extend MIPI specifications into the automotive market. His educational background includes an MSEE specializing in communications systems from Arizona State University and a BSEE from the California Institute of Technology.

-

11:22 | MIPI – Making the 5G Vision a Reality

View the presentation slides »

Listen to the audio recording »

The mission of MIPI Alliance is to develop the world’s most comprehensive set of interface specifications for mobile and mobile-influenced products. MIPI Alliance has always been at the forefront of defining state-of-the-art, low-power interconnects for the key subsystems in advanced mobile platforms. The 5G wave is coming like a tsunami: it will start with mobile and expand into all areas of our lives. Meeting the demands of the 5G era over the next decade and beyond will require high-performance, scalable system interconnects, and MIPI is prepared for the challenge.

To ensure MIPI meets or exceeds the requirements of next-generation 5G mobile and beyond-mobile use cases, all working groups are reviewing their roadmaps to assess the implications of future 5G technologies and emerging 5G use cases.

This presentation focuses on how current and future MIPI specifications will be used to achieve our goal of “MIPI – Making the 5G Vision a Reality”.

Presenters:

Kenneth Ma is director of technology planning at Huawei and vice president and director of the CCIX Consortium. He has 30 years of industry experience and has been involved in several industry standards organizations, including MIPI Alliance, CCIX Consortium, JEDEC, USB-IF, PCI-SIG® and Gen-Z Consortium.

Kenneth has contributed to the MIPI Camera, Display/Touch, LLI and UniPro specifications and served as a MIPI technical liaison, driving collaboration between MIPI DSI℠ v1.2 and the VESA Display Stream Compression (DSC) standard. Kenneth is the recipient of the MIPI 2014 Special Achievement Award for his contributions.

Kevin Yee is technical business development director at Cadence Design Systems Inc. In this role, he has driven system design enablement and ecosystem partnerships, developing comprehensive system solutions in key market segments such as high-performance compute, automotive, internet of things and mobile applications.

Kevin has more than 25 years of experience in the semiconductor industry, with a background in system/SoC/ASIC design, FPGA architecture and IP development, and he holds several patents on design architecture. He has been involved with a number of industry standards organizations, including PCI-SIG, CCIX, USB-IF, JEDEC and MIPI.

-

13:07 | Dual Mode C-PHY/D-PHY Use in VR Display IC

View the presentation slides »

Listen to the audio recording »

This Synaptics and Mixel co-presentation covers the Synaptics VXR7200 DisplayPort-to-dual-MIPI VR bridge IC, which integrates a Mixel C-PHY℠/D-PHY℠ combo IP and controller. This is the world’s first announced product to support display applications using both MIPI C-PHY and D-PHY. The MIPI PHY supports an aggregate data rate of up to 10 Gbps in D-PHY mode and 17.1 Gbps in C-PHY mode. The Synaptics R63455 VR display driver IC is a companion chip that drives displays using special VR display timing modes.
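As a rough cross-check of the quoted aggregate rates, here is a minimal arithmetic sketch. It assumes a four-lane D-PHY running at 2.5 Gbps per lane and a three-trio C-PHY running at 2.5 Gsym/s per trio, with C-PHY's encoding carrying 16 bits in every 7 symbols. The lane counts and per-lane rates are assumptions chosen to reproduce the totals; the abstract itself does not state them.

```python
# Rough aggregate-bandwidth arithmetic for a combo C-PHY/D-PHY.
# ASSUMPTION: 4 D-PHY lanes at 2.5 Gbps and 3 C-PHY trios at 2.5 Gsym/s;
# these figures are illustrative, not taken from the presentation.

C_PHY_BITS_PER_7_SYMBOLS = 16  # C-PHY maps each 16-bit word onto 7 symbols


def dphy_aggregate_gbps(lanes: int, gbps_per_lane: float) -> float:
    """D-PHY: each data lane carries one bit per unit interval."""
    return lanes * gbps_per_lane


def cphy_aggregate_gbps(trios: int, gsym_per_s: float) -> float:
    """C-PHY: each 3-wire trio carries 16/7 (about 2.28) bits per symbol."""
    return trios * gsym_per_s * C_PHY_BITS_PER_7_SYMBOLS / 7


if __name__ == "__main__":
    print(f"D-PHY, 4 lanes x 2.5 Gbps:  {dphy_aggregate_gbps(4, 2.5):.1f} Gbps")
    print(f"C-PHY, 3 trios x 2.5 Gsps: {cphy_aggregate_gbps(3, 2.5):.1f} Gbps")
```

Under these assumptions the C-PHY figure works out to about 17.14 Gbps, which rounds to the 17.1 Gbps quoted for the combo PHY. Usable payload throughput would be somewhat lower once protocol overhead and blanking are taken into account.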

The Synaptics IC is optimized for VR, AR and MR dual-display headsets with resolutions up to 3K×3K at 120 Hz using DSC. The MIPI interface can operate in either single-link or dual-link mode.

The presentation covers block diagrams of the Synaptics IC and the Mixel IP and also shows silicon characterization results.

Authors: Ashraf Takla, president and CEO, Mixel, Inc. & Jeffrey A. Lukanc, senior director for IoT video products, Synaptics

Presenters:

Ahmed Ella has been working for Mixel since 2011, where he heads Mixel's engineering team in Egypt. Before that, he worked at ICpedia and the Mentor IP division, managing teams working on serial link products such as HDMI, USB and multi-standard SerDes. Ella has 18 years of mixed-signal design and management experience. He holds a BSEE from Ain Shams University and an MSEE from Cairo University in Egypt.

Jeff Lukanc has worked at Synaptics for more than six years managing discrete touch and video interface product lines. Previously, he worked at IDT, managing the DisplayPort video product family, and was also director of engineering for PCI Express and MIPS SoC product lines. He has a BSEE from the University of Illinois, an MSEE from SMU and an MBA from the University of St. Thomas.

-

13:37 | Powering AI and Automotive Applications with the MIPI Camera Interface

View the presentation slides »

Listen to the audio recording »

Designers are reaping the benefits of the MIPI Camera Serial Interface (CSI-2℠) in applications beyond mobile, including automotive and artificial intelligence. Cameras and other sensors, such as radar and lidar, serve a multitude of functions, from advanced driver-assistance systems (ADAS) to machine vision in artificial intelligence. The MIPI camera interface enabling these functions must adhere to stringent automotive standards while helping designers meet their low-power and high-performance requirements. In artificial intelligence SoCs, the MIPI camera interface provides real-time data connectivity between sensors and processors.

This presentation describes the MIPI camera interface’s different use cases and implementations in automotive and AI applications and details the interface’s advantages for each use case. It also briefly describes the benefits of the MIPI I3C℠ specification as a key part of the MIPI camera solution.

Presenter:

Hyoung-Bae Choi (HB Choi) is a field application engineer manager at Synopsys Korea. HB is responsible for helping users easily integrate Synopsys interface IP solutions such as USB, PCI Express, DDR, MIPI and Ethernet into SoCs targeting mobile, digital TV, wireless connectivity, storage and networking applications. Before joining Synopsys, HB worked as an SoC design engineer and architect in the areas of mobile, LTE modem and media processor design at Samsung and LGE.

-

14:37 | Integrating Image, Radar, IR and TOF Sensors: Developing Vision Systems with Dissimilar Sensors

View the presentation slides »

Listen to the audio recording »

Vision and vision sensors play an ever-expanding role in nearly every field of electronics, including security, industrial automation, medical equipment, virtual reality (and augmented and mixed reality), automobiles and drones, as well as many more. Many of these applications need multiple, often dissimilar, sensors, such as image, radar, IR and time-of-flight (TOF) sensors.

As an example, consider the architecture of an automobile with ADAS features (drones and AR/VR systems face similar challenges). Surround view, parking assistance, traffic sign recognition, emergency braking, collision avoidance, cross-traffic alert, blind spot detection, cruise control and forward vehicle detection are handled by a variety of image sensors, lidar and radar. Application processors typically do not have enough I/Os to support this many sensors, so a method is needed to aggregate these multiple signals into fewer data streams.

MIPI CSI-2℠ virtual channels, together with the CSI-2 data type field, make it possible to tag each packet in a single CSI-2 video stream with its sensor source and data type. This enables a single video stream to transport video, data and metadata from a variety of sensors. That in turn minimizes the need for multiple cables carrying information over long distances and reduces the number of physical I/O connections to application processors.
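To make the tagging concrete, here is a minimal sketch assuming the original CSI-2 packet header layout, in which an 8-bit Data Identifier carries a 2-bit virtual channel in bits [7:6] and a 6-bit data type in bits [5:0]. The helper names (`pack_data_identifier`, `demultiplex`) and the sensor-to-channel assignment are hypothetical; later CSI-2 versions extend the virtual channel field with additional bits, which this sketch ignores.

```python
from typing import Dict, List, Tuple

# Subset of CSI-2 data type codes used in this illustration.
DT_EMBEDDED_8BIT = 0x12  # embedded 8-bit non-image data (e.g. metadata)
DT_RAW10 = 0x2B
DT_RAW12 = 0x2C
DT_USER_8BIT_0 = 0x30    # first user-defined 8-bit data type (0x30-0x37)


def pack_data_identifier(virtual_channel: int, data_type: int) -> int:
    """Build a Data Identifier byte: VC in bits [7:6], DT in bits [5:0]."""
    if not 0 <= virtual_channel <= 3:
        raise ValueError("virtual channel must fit in 2 bits")
    if not 0 <= data_type <= 0x3F:
        raise ValueError("data type must fit in 6 bits")
    return (virtual_channel << 6) | data_type


def unpack_data_identifier(di: int) -> Tuple[int, int]:
    """Split a Data Identifier byte back into (virtual_channel, data_type)."""
    return (di >> 6) & 0x3, di & 0x3F


def demultiplex(packets: List[Tuple[int, bytes]]) -> Dict[int, List[Tuple[int, bytes]]]:
    """Group (data_identifier, payload) packets by virtual channel,
    keeping the data type alongside each payload."""
    streams: Dict[int, List[Tuple[int, bytes]]] = {}
    for di, payload in packets:
        vc, dt = unpack_data_identifier(di)
        streams.setdefault(vc, []).append((dt, payload))
    return streams


if __name__ == "__main__":
    # HYPOTHETICAL assignment: VC0 = image sensor, VC1 = radar, VC2 = TOF sensor.
    merged = [
        (pack_data_identifier(0, DT_RAW10), b"\x01\x02"),
        (pack_data_identifier(1, DT_USER_8BIT_0), b"\x10"),
        (pack_data_identifier(0, DT_EMBEDDED_8BIT), b"\xaa"),
        (pack_data_identifier(2, DT_RAW12), b"\x20\x21"),
    ]
    for vc, items in sorted(demultiplex(merged).items()):
        print(vc, [hex(dt) for dt, _ in items])
```

A bridge or application processor on the receiving side can apply the same split to route each packet to the right pipeline. This is how one physical link can stand in for several separate sensor connections, with image data and its metadata staying associated through the shared virtual channel.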

This presentation discusses the architectures and tradeoffs in mission-critical vision applications. It also covers the details of combining and tagging multiple data streams, including Camera Control Interface (CCI) integration.

Presenter:

Tom Watzka is the technical mobile solutions architect at Lattice Semiconductor, with more than 20 years of experience developing embedded products, including seven years developing consumer mobile solutions. Currently, Watzka is the marketing product manager for the CrossLink video bridge product line, focused on mobile and mobile-influenced markets. He received his bachelor of science degree from the Rochester Institute of Technology and his master's degree from Pennsylvania State University, where his master’s thesis focused on FFT algorithms.

-

15:07 | High-Performance VR Applications Drive High-Resolution Displays with MIPI DSI

View the presentation slides »

Listen to the audio recording »

Analogix's DisplayPort-to-MIPI DSI℠ controller for VR head-mounted displays (HMDs) powers today’s tethered VR headsets in various configurations, each with distinct benefits and performance levels. A high-speed DisplayPort interface connects to the GPU that sources the VR content, while the MIPI DSI interface offers up to 16 DSI lanes, partitioned over four ports for flexibility.

Presenter:

Miguel Rodriguez has more than 15 years of experience in high-speed input/output (I/O) technologies deployed in networking, server and storage enterprise markets, as well as high-speed display technologies in mobile and AR/VR applications. At Analogix, Miguel is responsible for the VR head-mounted display controller family of products, which include DisplayPort to MIPI DSI display controllers, currently being used in a variety of high-performance VR head-mounted display products.

-

15:52 | Troubleshooting MIPI M-PHY Link and Protocol Issues

View the presentation slides »

Listen to the audio recording »

The M-PHY® specification is designed to give mobile devices a low-power, high-performance interface. Several higher-level protocols use the M-PHY physical layer for storage, I/O and memory in mobile devices.

This presentation discusses how higher-layer protocols, including UniPro® and UFS, use the M-PHY physical layer to enable efficient, low-power storage on mobile platforms. It also covers debug and analysis techniques for UFS and UniPro that allow root-cause analysis to be performed efficiently and effectively.

Presenters:

Gordon Getty is a senior application engineer at Teledyne LeCroy. He has worked with high-speed serial technologies for more than 15 years, including MIPI D-PHY℠ and M-PHY® technologies, PCI Express and NVMe. Gordon has developed numerous test specifications and test procedures for PCI Express and other technologies. He graduated from Glasgow University in Scotland with a bachelor’s degree in electronics and music, and holds a master of science in information technology from the University of Paisley in Scotland.

Juhyun Yang is a senior sales engineer for Teledyne LeCroy in South Korea. Juhyun has 20 years of experience with protocol test tools for high-speed protocols, including PCI Express, USB, SAS/SATA and MIPI/UFS. He also works to promote testing methodologies for future technologies.

-

16:37 | Next Generation Verification Process for Automotive and Mobile Designs with CSI-2 Interface

View the presentation slides »

Listen to the audio recording »

MIPI CSI-2℠ specifications are widely adopted and integrated in many complex mobile systems. In recent years, MIPI CSI-2 specifications have been adopted for automotive systems as well. The characteristics of these systems, such as an increased number of interfaces and greater system complexity, require a state-of-the-art verification methodology, usually under an aggressive timeline.

This presentation describes a methodology for a full verification flow, from the IP level to the SoC level, based on advanced verification platforms for simulation, prototyping, emulation and virtual emulation.

Presenters:

Thierry Berdah is a director of software engineering at Cadence Design Systems. He manages the Verification IP (VIP) product line for MIPI, Ethernet and DisplayPort, which helps semiconductor companies shorten their time-to-market and perform superior protocol compliance verification for their designs. For more than 15 years, Thierry has developed and led many verification projects for major companies in diverse areas such as Ethernet, PCIe, HDMI and DisplayPort. Thierry earned his BSc degree in communication systems engineering from Ben-Gurion University in Israel and his MBA from EADA Business School in Barcelona.

Yafit Snir currently leads the development of MIPI verification IP aimed at emulation at Cadence. She has more than 16 years of experience in hardware design and verification, and software development. She holds bachelor's degrees in electrical engineering and in computer science, both from Tel Aviv University, and a master's in electrical engineering, specializing in evolutionary algorithms. She also co-authored the publication “Tailoring ε-MOEA to Concept-Based Problems,” part of the Springer Lecture Notes in Computer Science book series (volume 7492).

Photos & Videos

-

Highlights Video

See the event highlights video.

-

Event Photos

See all of the event photos.