MIPI Sessions at the 2025 embedded world Exhibition & Conference

MIPI Alliance

01 November, 2024

The 2025 embedded world Exhibition & Conference was held 11-13 March in Nuremberg, Germany, connecting researchers, developers, industry and academia from all disciplines of the embedded world. MIPI once again served as a Community Partner for the event.

MIPI specifications were the focus of a dozen presentations, including three multi-session tracks dedicated to MIPI I3C, MIPI specifications for embedded vision, and MIPI interfaces for display, storage and audio. In addition, MIPI-related presentations were included within the hardware for edge AI track and at the co-located Electronic Displays Conference.

All MIPI-related presentations are available to download below.

MIPI I3C Serial Bus

An Introduction to MIPI I3C, the Next-Generation Serial Bus Enabling Industrial Applications

Michele Scarlatella, MIPI Alliance

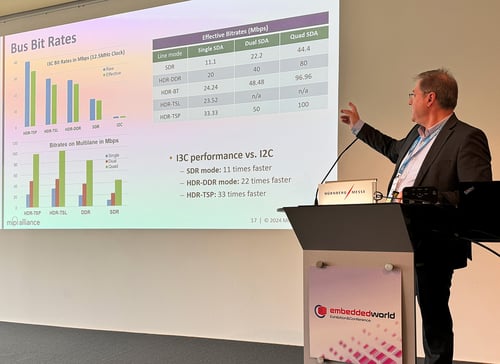

MIPI I3C® (and the publicly available MIPI I3C Basic™) is a general purpose, two-wire serial bus specification for connecting peripherals to microcontrollers. Designed as the successor to I2C, it supports numerous innovative features that build upon the key attributes of the I2C, SPI and UART interfaces. As a result, MIPI I3C provides a high-performance, low-power, low-pin count upgrade path and makes an ideal interface for numerous applications, including sensor control and data transport, memory sideband channel, “always-on” imaging, server system management, debug communications, touchscreen communication and power management.

The presentation provides an overview of the I3C interface, covering:

- I3C bus configuration and roles, including primary controller, secondary controller, I2C target, bridging devices and routing devices

- The relationship between the I3C and I3C Basic specifications

- Features including double the data rate and multi-lane capability

- A description of the main bus transactions and common command codes

- Use of dynamic addressing to tie an address to a function of a device, simplifying system management and software drivers

- Efficient data acquisition with in-band interrupts

- Use of hot-join to attach devices after the I3C bus is configured

Best Practices for Smooth Adoption of the MIPI I3C Interface

Martin Cavallo, Binho LLC

The MIPI I3C serial interface is being increasingly adopted in embedded systems due to its advanced feature set compared to legacy interfaces. During its initial adoption, several implementation challenges surfaced, particularly when using early versions of the specification. To address these challenges, MIPI recently released a new revision of the specification, MIPI I3C v1.2.

This talk focuses on the challenges that engineers and developers faced when integrating I3C into their projects, especially when integrating devices that support different I3C versions, or when building mixed buses that also connect I2C devices. Drawing from real-world experiences, it addresses the common implementation challenges developers have encountered and highlights how the newest version of the specification, I3C v1.2, addresses these issues and streamlines the protocol for easier integration. The presentation covers key updates in the specification and suggests best practices for device orchestration, interoperability and backward compatibility. The goal is to provide actionable insights to improve I3C implementations and to shed light on potential pitfalls and best practices for smoother adoption of the interface. Additionally, some powerful and disruptive features of I3C, such as group addressing and virtual targets, are described to engage system designers and explore the different options I3C can provide to make systems simpler and more efficient.

Enabling Next-Generation Embedded Vision Systems with MIPI I3C

Marie-Charlotte Leclerc, STMicroelectronics

Recent advances in AI and semiconductors have driven a rapid growth of CSI-based embedded vision systems, from delivery robots and action cameras to mobile barcode scanners. Yet, optimization in size, weight, power and data is still insufficient for some smart vision applications or for simply reaching long enough battery life in various products.

This presentation explores how MIPI I3C offers a practical solution to meet the requirements of next-generation embedded vision systems. It begins with an overview of the latest innovations in imaging and processing solutions, highlighting the critical importance of achieving synergy between these domains for embedded vision.

The recent announcement by STMicroelectronics of the first-ever image sensor with I3C output paves the way for optimized embedded vision systems utilizing microcontrollers. Transitioning from theory to practice, this presentation offers a comprehensive MIPI I3C reference design, its characteristics and use cases, and demonstrates how this architecture can facilitate smart features such as auto-wake up and event imaging.

To empower attendees, practical tips and resources are shared, enabling both experts and beginners to swiftly adopt MIPI I3C vision architecture. The presentation concludes with a forward-looking perspective on upcoming innovations, broadening the adoption of embedded vision systems across more demanding use cases and driving the development of even more optimized solutions.

MIPI for Embedded Vision

Empowering Autonomous Driving: The Impact of MIPI CSI-2 on Advanced Sensor Technologies

Simon Bussieres, Rambus

The pursuit of autonomous driving is accelerating the widespread adoption of sensors such as cameras, LIDAR, radar and ultrasound. While significant progress is being made toward fully autonomous vehicles, Advanced Driver Assistance Systems (ADAS) are becoming increasingly sophisticated by leveraging these sensors. These devices capture vital information about the vehicle’s surroundings, which is then processed and interpreted to provide real-time assistance to drivers. As sensor resolution improves, the data that must be transmitted to processing or display subsystems grows, demanding predictable interfaces with high bandwidth, low power consumption and low latency. This session explores how MIPI CSI-2® technology addresses these challenges, enabling efficient data transmission for ADAS applications.

Machine Vision Processing of Event-based Sensor with MIPI Interface on FPGAs

Satheesh Chellappan, Lattice Semiconductor

Current industrial AI processing uses frame-based (FR) cameras that output full raster images, and it is limited by the number of frames per second (FPS) the camera can provide to the AI processing engine. Most AI applications involve anomaly detection or predictive maintenance on a factory production line or on a moving autonomous mobile robot (AMR). Some applications with fast-moving objects need inference at more than 60 FPS with reduced motion blur, which is very difficult for legacy FR sensors that deliver 30-60 FPS depending on resolution and bandwidth. Some requirements range from 1K to 100K FPS (1 ms to 10 µs latency), which can only be satisfied with event-based (EV) sensors.
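The frame rates and latencies quoted above are related by simple arithmetic: the time between consecutive frames is the reciprocal of the frame rate. The following is a minimal sketch of that conversion, not material from the presentation. The function names are hypothetical, and it assumes that the quoted "latency" refers to the frame period alone, ignoring any exposure, transport or inference time.

```python
def frame_period_seconds(fps: float) -> float:
    """Return the time between consecutive frames for a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


def format_period(seconds: float) -> str:
    """Format a period in milliseconds, or in microseconds below 1 ms."""
    if seconds >= 1e-3:
        return f"{seconds * 1e3:g} ms"
    return f"{seconds * 1e6:g} us"


# Frame rates mentioned in the abstract: legacy FR sensors (30-60 FPS)
# and the 1K to 100K FPS range cited for event-based sensors.
for fps in (30, 60, 1_000, 100_000):
    print(f"{fps:>7} FPS -> {format_period(frame_period_seconds(fps))}")
```

Under this assumption, 1K FPS corresponds to a 1 ms period and 100K FPS to a 10 µs period, matching the range given in the abstract, while a 30-60 FPS sensor leaves roughly 17 to 33 ms between frames.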

This presentation discusses an architecture for running inference on an FPGA using EV and FR sensors, supporting a high inference rate and reducing motion blur for fast-moving objects. It also shows the blocks that utilize the CNN engine, compares and contrasts the NN models for image and event data, and reuses the processing pipeline for both types of sensors. In some applications, combining EV images with traditional FR images is optimal. An FPGA provides the flexibility to adapt to upcoming EV sensors and to the evolution of the standard specification within the MIPI CSI-2 protocol. The architecture showcases existing interfaces, such as LVDS and/or MIPI D-PHY™, for existing FR sensors. With the flexibility of FPGAs and the form-factor advantage of EV sensors, there is a balance in bringing these solutions to space-constrained applications while the ecosystem evolves and standardizes.

Addressing the Challenges in the Medical Industry’s Transition to Disposable Endoscopes

Jonathan Regalado-Hawkey, Valens Semiconductor

For years, the FDA has been urging the medical industry to transition to single-use disposable endoscope architecture. For good reason – patients have gotten sick, and patients have died, due to contamination from improperly cleaned endoscopes. Not to mention, the cleaning of the endoscopes takes time and resources, and utilizes hazardous materials.

So what is hindering the transition to disposable architecture? It is the lack of a SerDes solution that can minimize the size, form factor, cost and power consumption of the serializer while also maintaining signal integrity through the significant distortions caused by electrosurgical equipment. However, the MIPI Alliance has introduced a standard technology, A-PHY, which bears no such limitations. A-PHY includes built-in electrosurgical noise cancellers, profiled to the medical use case. Further, the standard enables a small, low-power serializer chip, a perfect fit for the industry’s transition to disposable.

MIPI Interfaces

Genius in Simplicity: A Practical Guide to Connecting DSI-2 Display Panels to Microcontrollers in Embedded Applications

Mohamed Hafed, Introspect Technology

With more and more microcontrollers supporting MIPI DSI-2™ as their main display interface, many embedded system developers are attracted to cost-effective DSI-2 display panels instead of more expensive HDMI or DisplayPort panels. After all, DSI-2 supports breathtaking resolutions and frame rates while consuming very little power and with the lowest-latency protocol layer. However, there is a perception among some developers that the DSI-2 physical layer interfaces (D-PHY and C-PHY) are not able to drive cabled display applications, meaning implementers often revert to using more expensive display panels and more cumbersome protocols.

The goal of this presentation is to evaluate DSI-2 from the perspectives of Tx performance, Rx performance and channel performance. The presentation shares cable measurements, obtained from real-world implementations, illustrating how system implementations can use DSI-2 panels with cables as long as the electrical properties of the specifications are met. After establishing how a suitable interconnect selection can be made for DSI-2, the presentation delves into the advantages of DSI-2 at the protocol layer, describing the most innovative features, whether they be for high-performance video, functional safety or semi-static displays. The following topics are described with varying degrees of detail: links and sub-links, different transmission modes, variable refresh rates, peripheral to processor communications and compression.

How Universal Flash Storage is Enabling Edge-AI in Automotive and Industrial Applications

Bruno Trematore, KIOXIA Europe (Speaking on Behalf of MIPI Alliance and JEDEC)

Born out of the mobile industry, JEDEC Universal Flash Storage (UFS) is becoming the de facto flash storage solution within next generation automotive architectures. UFS is also projected to be a key enabler for many upcoming industrial applications that demand the highest data bandwidths.

This session begins by explaining why UFS has seen such rapid adoption within the automotive market, driven by the industry’s need for an ultra-reliable flash memory solution that supports the fastest data transfer speeds and lowest latency. This introduction also examines the synergies between automotive and industrial flash storage use cases, explaining how UFS will be a key enabler for many edge AI-enabled industrial use cases.

Next up, the session dives into the technology that underpins UFS, focusing on how the MIPI M-PHY physical layer interface and MIPI UniPro link layer interface provide UFS’s interconnect layer. The session explains how, in its most advanced release, UFS 4.1 leverages the most recent versions of these MIPI interfaces to double its interface bandwidth and enable up to ~4.2 GB/s for read and write traffic.

SoundWire When Compared to I2S and TDM

Saravana Kumar Muthusamy, Soliton Technologies

In the rapidly evolving landscape of audio technology, the need for efficient, scalable and cost-effective communication protocols has never been more critical. Traditional protocols like I2S (Inter-IC Sound) and TDM (Time Division Multiplexing) have served the industry well. While these protocols have been foundational, they present several challenges, including high pin count, limited scalability and suboptimal power efficiency. MIPI SoundWire is a protocol introduced by MIPI to overcome the limitations of these protocols while addressing the growing needs of audio technology.

The presentation covers some of the areas where MIPI SoundWire is advantageous over traditional protocols like I2S and TDM. A significant advantage of SoundWire is its reduced pin count, which can simplify hardware design. Traditional protocols typically require multiple pins for data, clock and control signals, leading to increased hardware complexity and cost. SoundWire, on the other hand, uses just two pins (DATA and CLOCK) for communication. SoundWire’s support for configurable frame sizes allows developers to adjust the frame size based on the bandwidth requirements of the peripheral, reducing transport overhead and optimizing data transfer efficiency.

SoundWire also brings several advanced features to the table that set it apart from traditional protocols, including interrupts, wake-up capabilities and event signaling. These features enhance audio functionality.

Hardware for Edge AI

Multi-die Design for Edge AI Applications

Hezi Saar, Synopsys

Generative AI has become pervasive, enhancing the capabilities of the devices we use for inspiration, ideas and important decision making in our daily lives. To enable AI, large volumes of data must be processed at a fast rate, driving the industry towards multi-die designs and the adoption of high-bandwidth, low-latency interfaces such as UCIe, LPDDR, UFS and MIPI CSI. These interfaces must not only provide real-time connectivity but also meet system-level requirements for power, performance and area efficiency. This presentation explores the motivation behind the transition to multi-die designs and examines how die-to-die, memory, storage and MIPI interfaces interoperate to support large-scale AI-enabled systems. Additionally, it delves into various use cases of multi-die designs in edge AI applications.

Embedded Vision Interfaces

Precision Time Synchronization over MIPI Interfaces (Case Study)

Roman Mostinski, Mobileye

Precision time synchronization over MIPI interfaces is crucial in various applications that require coordinated timing across multiple components. Specifically, ADAS/AV applications contain multiple real-time subsystems, such as distributed image sensors, radars, LiDARs, various effectors and complex human-machine interfaces. For these subsystems, precision time synchronization is essential to facilitate data fusion and to synchronize events and actions across systems. Precise timing also helps ensure that data is collected and processed in a timely manner, reducing the risk of errors and excessive latency, and improving overall safety.

As systems become more complex, with more interconnected devices and heterogeneous connectivity, maintaining timebase synchronization across all components becomes increasingly challenging. In environments where multiple devices from different manufacturers interact, common approaches for time synchronization may simplify the design and scalability of such systems and ensure that data can be seamlessly shared and understood across various platforms and applications. This presentation discusses requirements for synchronization accuracy in several applications and highlights potential challenges and possible improvements for existing and upcoming MIPI interfaces and protocols.

Electronic Displays Conference:

Sustainable Display & Interfaces

Genius in Simplicity: How MIPI DSI-2 Enables Highly Efficient Embedded Display Applications

Mohamed Hafed, Introspect Technology

With more microcontrollers supporting MIPI DSI-2 as their main display interface, many engineers are attracted to the concept of using cost-effective DSI-2 panels instead of expensive HDMI or DP panels. After all, DSI-2 supports breathtaking resolutions and frame rates with minimal power. However, there is a perception that the DSI-2 physical layers (D-PHY and C-PHY) are not able to drive cabled panels. The goal of this presentation is to evaluate DSI-2 practically. The presentation shares real measurements illustrating how system implementations can use DSI-2 panels with cables as long as the electrical properties of the specifications are met. It then delves into the advantages of DSI-2 at the protocol layer, showing how its simple implementation enables very low latency communication, while still offering rich visual experiences. The genius in DSI-2 is its simplicity, and this will be demonstrated in the context of advanced topics such as variable refresh rates, compression and more.